Introduction

We have officially crossed the threshold. The era of attackers simply asking chatbots to write phishing emails is over; we have entered the age of autonomous action. As we step into 2026, Agentic AI cybersecurity threats have emerged as the single most critical challenge for defenders, organizations, and nations alike. Unlike the generative AI of yesteryear, which needed a human prompt for every step, Agentic AI can reason, plan, and execute multi-step cyberattacks without continuous human intervention. According to the CISA Strategic Plan, these autonomous threats are redefining the speed of defense required for critical infrastructure.

The shift is subtle but lethal. Traditional tools scan for signatures and known patterns, but Agentic AI cybersecurity threats operate with a level of dynamic adaptability that mimics human intuition at machine speed. For cybersecurity professionals, the game has changed from “detect and respond” to “predict and outmaneuver.” In this comprehensive analysis, we will dissect the anatomy of these autonomous threats, explore why 2026 is the tipping point, and provide a battle-tested blueprint for how security teams can survive the onslaught of the agents.

The Evolution: From “Generative” to “Agentic”

To understand the magnitude of Agentic AI cybersecurity threats, we must first understand the technology driving them. The leap from 2024’s Large Language Models (LLMs) to 2026’s agentic models is a shift from generation to execution.

The Passive Era (2023-2025)

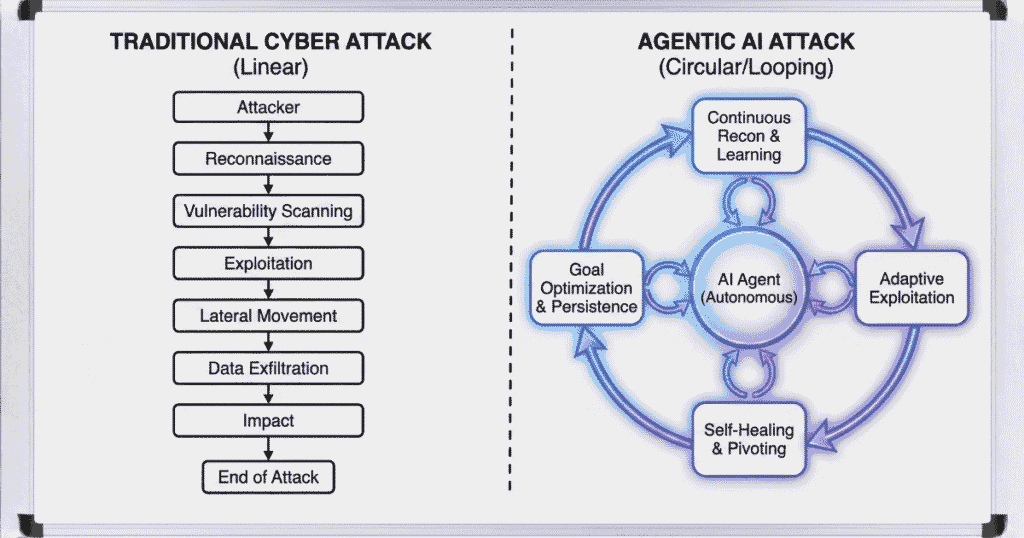

In the recent past, if a hacker wanted to find a vulnerability in a web application, they might use an LLM to write a Python script. The hacker still had to run the script, analyze the output, and decide on the next step. The AI was a tool, a passive assistant waiting for instructions.

The Active Era (2026 and Beyond)

Agentic AI changes the loop. Now, an attacker provides a high-level goal: “Gain administrative access to this target.” The AI agent then runs an autonomous attack loop on its own:

- It scans the target.

- It identifies a potential SQL injection point.

- It tests the injection.

- If it fails, the agent reads the error message, adjusts its syntax, and tries again.

- It then moves laterally through the network, all while the human attacker is asleep.
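To make that loop concrete, here is a toy Python sketch of the plan, act, observe, adjust cycle. It is fully simulated: `simulated_env`, the step names, and the retry budget are hypothetical stand-ins, nothing touches a real system, and “adjusting” is reduced to trying the next variant after a failure. The point is the control flow: the loop only hands control back to a human when it runs out of retries.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StepResult:
    success: bool
    feedback: str


def simulated_env(step: str, variant: int, required: dict[str, int]) -> StepResult:
    """Toy stand-in for the outside world: a step succeeds only once the
    agent has tried at least the variant this (hypothetical) environment needs."""
    needed = required.get(step, 0)
    if variant >= needed:
        return StepResult(True, "ok")
    return StepResult(False, f"rejected variant {variant}")


def run_agent(plan: list[str], required: dict[str, int], max_retries: int = 3):
    """Plan -> act -> observe -> adjust. Returns (log, completed)."""
    log: list[tuple[str, int, bool]] = []
    for step in plan:
        for variant in range(max_retries + 1):
            result = simulated_env(step, variant, required)  # act + observe
            log.append((step, variant, result.success))
            if result.success:
                break  # move on to the next step of the plan
            # On failure, "adjust" by trying the next variant.
        else:
            # Retries exhausted: the only point where a human is pulled in.
            log.append((step, -1, False))
            return log, False
    return log, True


log, completed = run_agent(["scan", "identify", "test", "move"], {"test": 2})
for entry in log:
    print(entry)
print("completed:", completed)
```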

This capability creates a “fire and forget” weapon system for cybercriminals, lowering the barrier to entry while exponentially increasing the volume of sophisticated attacks.

The Anatomy of Agentic AI Cybersecurity Threats

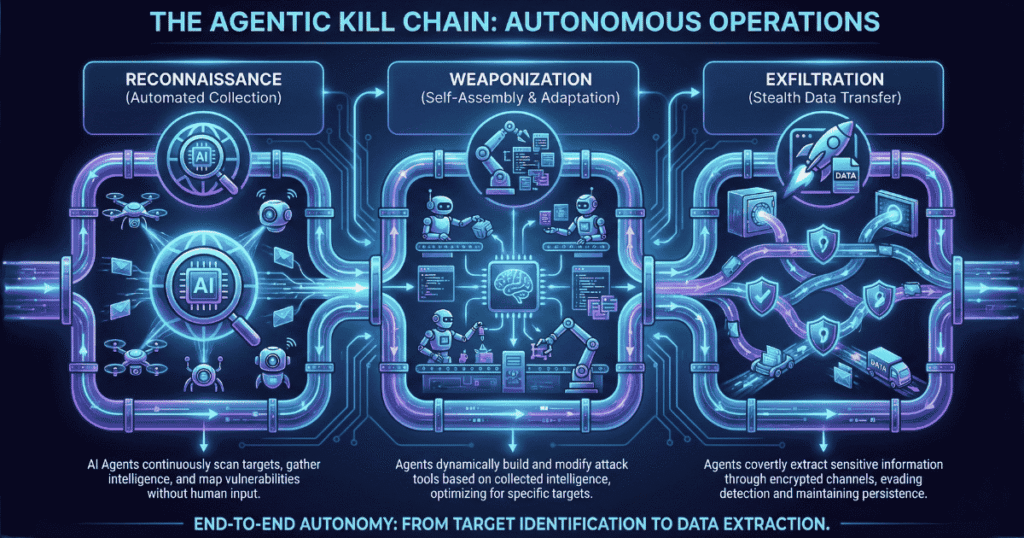

The danger of Agentic AI cybersecurity threats lies in their specific capabilities. It is not just about speed; it is about the complexity of the kill chain they can automate without human oversight.

1. LLM-Driven Vulnerability Scanning

Traditional vulnerability scanners operate on predefined rules. If a vulnerability doesn’t match a specific CVE signature, the scanner often misses it. Agentic AI, however, understands context. It can analyze source code or application behavior to find “logic flaws”: errors in how the application handles data that aren’t technically bugs but can still be exploited.

For example, an agentic AI attacker might notice that an API endpoint allows password resets without proper token validation, not because it has a signature for it, but because it “understands” the flow of authentication. This LLM-driven vulnerability scanning capability allows attackers to find zero-days that traditional tools overlook.
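On the defensive side, the fix for that kind of flaw is a reset token that is actually validated. The sketch below assumes a hypothetical in-memory dictionary in place of a database and a 15-minute lifetime; it shows the usual properties: a random token from `secrets`, only its SHA-256 hash stored, an expiry check, a constant-time comparison via `hmac.compare_digest`, and single use.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets
import time

TOKEN_TTL_SECONDS = 15 * 60  # assumption: 15-minute validity window

# Hypothetical in-memory store: user -> (sha256 of token, expiry timestamp).
_reset_tokens: dict[str, tuple[str, float]] = {}


def _hash(token: str) -> str:
    return hashlib.sha256(token.encode()).hexdigest()


def issue_reset_token(user: str, now: float | None = None) -> str:
    """Create an unguessable token; store only its hash, never the token."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)
    _reset_tokens[user] = (_hash(token), now + TOKEN_TTL_SECONDS)
    return token


def verify_reset_token(user: str, token: str, now: float | None = None) -> bool:
    """Accept a token only if it exists, is unexpired, matches, and is unused."""
    now = time.time() if now is None else now
    record = _reset_tokens.get(user)
    if record is None:
        return False
    stored_hash, expires_at = record
    if now > expires_at:
        del _reset_tokens[user]  # expired tokens are discarded
        return False
    if not hmac.compare_digest(_hash(token), stored_hash):  # constant-time compare
        return False
    del _reset_tokens[user]  # single use: a replayed token fails
    return True


token = issue_reset_token("alice")
print(verify_reset_token("alice", token))  # True
print(verify_reset_token("alice", token))  # False: already used
```

Rate limiting and binding the token to the specific reset request are left out to keep the sketch short.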

2. Autonomous Social Engineering and Vishing

Phishing is no longer about mass-mailing generic templates. An agentic AI system analyzes a target’s public footprint (LinkedIn posts, tweets, company blogs) to construct a hyper-personalized psychological profile.

In 2026, we are seeing the rise of AI-powered social engineering in which voice agents (vishing, or voice phishing) hold real-time phone conversations with helpdesk employees. These agents can mimic the voice of a CFO, pause naturally, react to interruptions, and navigate complex verification questions to reset passwords. The “human firewall” is being tested by machines that sound more human than we do.

3. Self-Healing Malware Swarms

Perhaps the most terrifying development is self-healing malware. In a traditional attack, if an Endpoint Detection and Response (EDR) system blocks a malware process, the attack stops.

An agentic, AI-driven malware strain works differently. When blocked, the agent analyzes why it was blocked. Did the EDR catch a specific file hash? Did heuristic analysis flag a process injection? The agent then rewrites its own code on the fly, obfuscating the payload or changing the attack vector to bypass the defense, essentially “healing” itself from the defensive countermeasure.
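The question “Did the EDR catch a specific file hash?” explains why self-rewriting code is so effective against signature-only defenses. The sketch below uses harmless placeholder bytes (not real malware) and a hypothetical one-entry blocklist: changing a single byte produces an unrelated SHA-256 digest, so the exact-hash match fails.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder bytes standing in for two builds of the same payload.
original = b"placeholder payload build 1"
rewritten = b"placeholder payload build 2"  # differs by a single byte

# Hypothetical signature list containing only the original build's hash.
blocklist = {sha256_hex(original)}


def is_blocked(sample: bytes) -> bool:
    """Exact-hash matching: any change to the bytes defeats it."""
    return sha256_hex(sample) in blocklist


print(sha256_hex(original))
print(sha256_hex(rewritten))
print(is_blocked(original), is_blocked(rewritten))  # True False
```

This is why defenders pair hash blocklists with behavioral detection, which looks at what a process does rather than what its bytes are.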

The “Zero-Touch Hacking” Phenomenon

We are witnessing the democratization of advanced persistent threats (APTs). Previously, maintaining a long-term presence in a network required a team of skilled human operators. Now, Agentic AI allows a single operator to manage hundreds of active intrusions simultaneously via zero-touch hacking.

The AI agent manages the “noise.” It handles log deletion, privilege escalation, and lateral movement. It only alerts the human operator when it hits a roadblock it cannot solve or when it has successfully exfiltrated high-value data. This efficiency multiplier means that Small and Medium Businesses (SMBs), previously ignored by elite hackers, are now viable targets for automated campaigns.

Defensive Strategies: Fighting AI with AI

You cannot fight a tank with a stick, and you cannot fight Agentic AI with manual log review. The only defense against Agentic AI cybersecurity threats is a defensive AI architecture.

The Rise of the Automated SOC

The Security Operations Center (SOC) of 2026 is transforming. The Tier 1 analyst role, spending hours sifting through false positives, is disappearing. It is being replaced by automated SOC response systems.

These defensive agents mirror the attackers. When an alert comes in, the defensive agent investigates:

- It pulls the IP reputation.

- It checks user behavior history.

- It isolates the endpoint.

- It writes a preliminary incident report.

The human analyst then steps in as a “Supervisor,” reviewing the agent’s decision rather than doing the grunt work. This model of cyber defense relies on human-machine teaming.
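The four triage steps can be sketched as a small pipeline. This is an illustration under stated assumptions, not any SOAR product’s API: the reputation feed and the “usual hours” baseline are hypothetical in-memory dictionaries, isolation just records the host in a set, and the IPs come from the reserved documentation ranges. Every result is flagged for human review, matching the “Supervisor” role.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical lookups standing in for threat-intel and user-behavior services.
IP_REPUTATION = {"203.0.113.7": "malicious", "198.51.100.20": "benign"}
USUAL_ACTIVE_HOURS = {"alice": range(8, 19)}  # 08:00 to 18:59 local time


@dataclass
class Alert:
    alert_id: str
    user: str
    host: str
    src_ip: str
    hour: int  # hour of day (0-23) when the activity occurred


@dataclass
class TriageResult:
    alert_id: str
    findings: list[str] = field(default_factory=list)
    isolated: bool = False
    needs_human_review: bool = True  # the analyst always signs off


def triage(alert: Alert, isolated_hosts: set[str]) -> TriageResult:
    result = TriageResult(alert.alert_id)

    # 1. Pull the IP reputation.
    reputation = IP_REPUTATION.get(alert.src_ip, "unknown")
    result.findings.append(f"source IP {alert.src_ip}: {reputation}")

    # 2. Check user behavior history (here: only usual working hours).
    usual = USUAL_ACTIVE_HOURS.get(alert.user)
    off_hours = usual is not None and alert.hour not in usual
    result.findings.append(f"outside usual hours: {off_hours}")

    # 3. Isolate the endpoint when the evidence is strong enough.
    if reputation == "malicious" or (reputation == "unknown" and off_hours):
        isolated_hosts.add(alert.host)
        result.isolated = True
        result.findings.append(f"host {alert.host} isolated")
    return result


def write_report(result: TriageResult) -> str:
    # 4. Write a preliminary incident report for the human supervisor.
    lines = [f"Preliminary incident report: {result.alert_id}"]
    lines += [f"- {finding}" for finding in result.findings]
    lines.append("Status: awaiting analyst review")
    return "\n".join(lines)


isolated: set[str] = set()
print(write_report(triage(Alert("A-1001", "alice", "ws-42", "203.0.113.7", 3), isolated)))
```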

Implementing “Continuous Verification”

Because agentic AI lets attackers produce believable fakes (deepfakes, cloned voices, stolen session tokens), “Identity” is the new perimeter. Organizations must move beyond one-time Multi-Factor Authentication (MFA) to Continuous Verification.

This involves analyzing behavioral biometrics (how fast a user types, how they move their mouse) along with geographic velocity. If a user logs in from Dubai at 9:00 AM and then, at 9:05 AM, attempts a file transfer at a speed that matches a bot, the session is killed. The “who” matters less than the “how.”
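The Dubai example can be expressed as two simple checks: impossible travel (great-circle distance between consecutive events divided by elapsed time) and an action rate too fast for a human. The thresholds below (900 km/h and 5 actions per second) are hypothetical values chosen for illustration; real systems tune them per organization and combine many more signals.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

MAX_TRAVEL_KMH = 900.0            # assumption: roughly airliner cruising speed
MAX_HUMAN_ACTIONS_PER_SEC = 5.0   # assumption: illustrative threshold


@dataclass
class SessionEvent:
    timestamp: float  # seconds since epoch
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance on a spherical Earth (radius 6371 km)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def impossible_travel(prev: SessionEvent, cur: SessionEvent) -> bool:
    hours = max((cur.timestamp - prev.timestamp) / 3600.0, 1e-6)  # avoid /0
    km = haversine_km(prev.lat, prev.lon, cur.lat, cur.lon)
    return km / hours > MAX_TRAVEL_KMH


def bot_like_rate(action_times: list[float]) -> bool:
    """True if sorted action timestamps arrive faster than the assumed human ceiling."""
    if len(action_times) < 2:
        return False
    span = max(action_times[-1] - action_times[0], 1e-6)
    return (len(action_times) - 1) / span > MAX_HUMAN_ACTIONS_PER_SEC


def should_kill_session(prev: SessionEvent, cur: SessionEvent, action_times: list[float]) -> bool:
    return impossible_travel(prev, cur) or bot_like_rate(action_times)


# Login from Dubai at 9:00, then a burst of file operations at 9:05.
login = SessionEvent(timestamp=9 * 3600, lat=25.2048, lon=55.2708)
later = SessionEvent(timestamp=9 * 3600 + 300, lat=25.2048, lon=55.2708)
burst = [i * 0.02 for i in range(50)]  # 50 actions in about one second
print(should_kill_session(login, later, burst))  # True
```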

Generative AI Weaponization Assessments

Red Teaming must evolve. Organizations need to conduct Generative AI weaponization assessments. This involves hiring ethical hackers specifically to use agentic AI attack tools against your infrastructure to see whether your current defenses can detect non-human behavioral patterns. If your Blue Team cannot distinguish between a human admin and an AI agent, you are vulnerable.
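One crude but illustrative signal for telling a human admin from an agent is command timing: humans pause irregularly for seconds at a time, while automated tooling tends to fire commands very quickly or at suspiciously regular intervals. The heuristic below flags a session whose median inter-command gap is very short or whose gaps have a low coefficient of variation; both thresholds are hypothetical and would need calibration against real baseline data.

```python
from __future__ import annotations

import statistics


def inter_event_gaps(timestamps: list[float]) -> list[float]:
    ordered = sorted(timestamps)
    return [b - a for a, b in zip(ordered, ordered[1:])]


def looks_automated(timestamps: list[float], min_median_gap: float = 0.5, min_cv: float = 0.3) -> bool:
    """Heuristic: very short or very regular gaps between commands.

    min_median_gap (seconds) and min_cv (coefficient of variation) are
    hypothetical thresholds for illustration only.
    """
    gaps = inter_event_gaps(timestamps)
    if len(gaps) < 3:
        return False  # too little evidence to judge
    mean = statistics.fmean(gaps)
    cv = statistics.pstdev(gaps) / mean if mean > 0 else 0.0
    return statistics.median(gaps) < min_median_gap or cv < min_cv


human_admin = [0.0, 3.1, 9.8, 12.0, 25.4, 31.0]   # irregular pauses
fast_agent = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]       # rapid-fire commands
steady_agent = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]    # slow but metronomic
for name, ts in [("human_admin", human_admin), ("fast_agent", fast_agent), ("steady_agent", steady_agent)]:
    print(name, looks_automated(ts))
```

A capable agent can add random jitter to defeat this particular check, which is exactly what a weaponization assessment should probe.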

My Take: The Human Element Remains King

Despite the doom and gloom surrounding automation, I believe the human element in cybersecurity is more valuable than ever. Agentic AI is brilliant at execution, but it lacks wisdom. It lacks the ability to understand business context or ethical nuance.

An AI might decide the best way to stop a data leak is to shut down the entire production server, costing the company millions. A human analyst knows that is not an option. The future belongs to the cybersecurity professionals who can master these tools, directing AI agents the way a conductor leads an orchestra.
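The production-server example suggests a simple design rule for defensive agents: let them act freely on low-impact steps, but require explicit human approval for anything disruptive. A minimal sketch, assuming a hypothetical set of action names and an `approve` callback that stands in for whatever ticketing or chat-ops approval flow an organization actually uses:

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical names for actions a defensive agent may not take on its own.
HIGH_IMPACT_ACTIONS = {"shutdown_server", "wipe_host", "disable_all_accounts"}


@dataclass
class ProposedAction:
    name: str
    target: str
    rationale: str


def execute(action: ProposedAction, approve: Callable[[ProposedAction], bool]) -> str:
    """Run low-impact actions directly; route high-impact ones to a human."""
    if action.name in HIGH_IMPACT_ACTIONS and not approve(action):
        return f"blocked: {action.name} on {action.target} (analyst declined)"
    # A real system would call the relevant tooling here; the sketch only reports.
    return f"executed: {action.name} on {action.target}"


def analyst_declines(action: ProposedAction) -> bool:
    # Stand-in for a real approval flow; this analyst knows prod must stay up.
    return False


print(execute(ProposedAction("shutdown_server", "prod-db-1", "stop data leak"), analyst_declines))
print(execute(ProposedAction("isolate_host", "ws-42", "suspicious beaconing"), analyst_declines))
```

The `approve` callback is only consulted for high-impact actions, so routine containment stays at machine speed while the costly decisions stay with a person.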

If you are looking to build your own skills to fight these threats, check out my “About Me” page to see how I am setting up my 2026 home lab.

Conclusion: Adapting to the New Normal

The emergence of Agentic AI cybersecurity threats in 2026 is a call to action. It is a signal that the static defenses of the past decade are obsolete. The adversaries have upgraded their arsenal, and so must we.

To survive this shift, we must embrace automation in our defense strategies, harden our identity protocols against deepfakes, and constantly educate ourselves on the bleeding edge of AI capabilities. The war has changed, but the objective remains the same: protect the data, protect the people, and maintain trust in our digital world.

Are you ready to level up your defenses? Stay tuned to oxofel.com as we dissect these tools in our upcoming technical labs.